I am Huyu Wu (伍胡宇), a first-year Master’s student at the Institute of Computing Technology, Chinese Academy of Sciences (ICT). Currently, I am exploring the frontiers of Agentic Search as a Search Algorithm Intern at Xiaohongshu (RED).

My earlier research focused on Dataset Distillation and Time Series Analysis, where I explored methods for efficient data representation and robust forecasting. I am always open to discussions and potential collaborations!

You can find more about my research interests on

🔥 News

- 2025.10: 🎉🎉 One paper was accepted by Transactions on Machine Learning Research.

- 2025.10: 🎉🎉 I joined Xiaohongshu as a Search Algorithm Intern to work on Agentic Search.

- 2025.09: 🎉🎉 One paper was accepted by Neural Networks.

📝 Publications

- Wu H, Su D, Hou J, Li G. Dataset Condensation with Color Compensation[J]. Transactions on Machine Learning Research, 2025. (First Author)

- Wu H, Jia B, Yuan X M. LLM-Led Vision-Spectral Fusion: A Zero-Shot Approach to Temporal Fruit Image Classification[J]. Neural Networks, 2025: 108155. (First Author, CCF-B)

- Jia B, Guo Z, Huang T, Guo F, Wu H. A generalized Lorenz system-based initialization method for deep neural networks[J]. Applied Soft Computing, 2024, 167: 112316. (Last Author, CAS Zone 1 Top)

- Jia B, Wu H, Guo K. Chaos theory meets deep learning: A new approach to time series forecasting[J]. Expert Systems with Applications, 2024, 255: 124533. (Co-First Author, CAS Zone 1 Top)

- Wu H, Jia B, Sheng G. Early-Late Dropout for DivideMix: Learning with Noisy Labels in Deep Neural Networks[C]//2024 International Joint Conference on Neural Networks (IJCNN). IEEE, 2024: 1-8. (First Author, CCF-C)

📖 Education

- 2025.09 – Present, M.S., Institute of Computing Technology, University of Chinese Academy of Sciences

- 2021.09 – 2025.06, B.S., College of Computer Science, Sichuan University

- 2020.09 – 2021.06, Business School, Business Administration, Sichuan University

💻 Internships

- 2025.10 – Present, Search Algorithm Intern at Xiaohongshu (Beijing)

- 2024.08 – 2025.09, Intern at TSAIL, Tsinghua University

- 2023.12 – 2024.04, Research Assistant at the Institute of Operations Research and Analytics, National University of Singapore

- 2022.11 – 2024.08, Research Assistant at the Machine Intelligence Lab, Sichuan University

📙 Projects

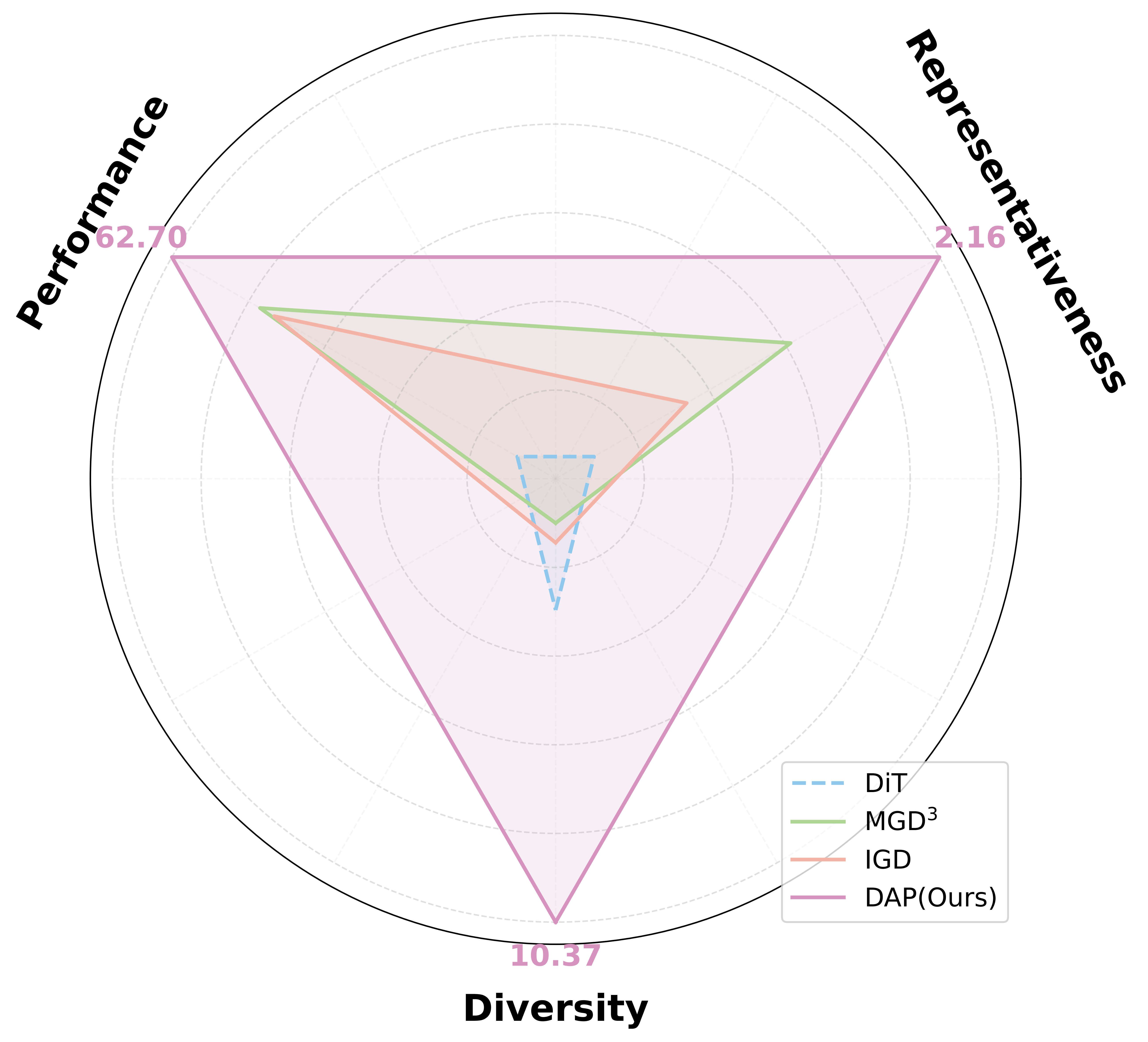

Diffusion Models As Dataset Distillation Priors

Dataset distillation seeks to create compact yet informative datasets, but balancing diversity, generalization, and representativeness remains challenging. We propose Diffusion As Priors (DAP), which exploits the representativeness prior inherent in diffusion models: it quantifies the similarity between synthetic and real data in feature space with a Mercer kernel and uses this similarity to guide the reverse diffusion process, without any retraining.

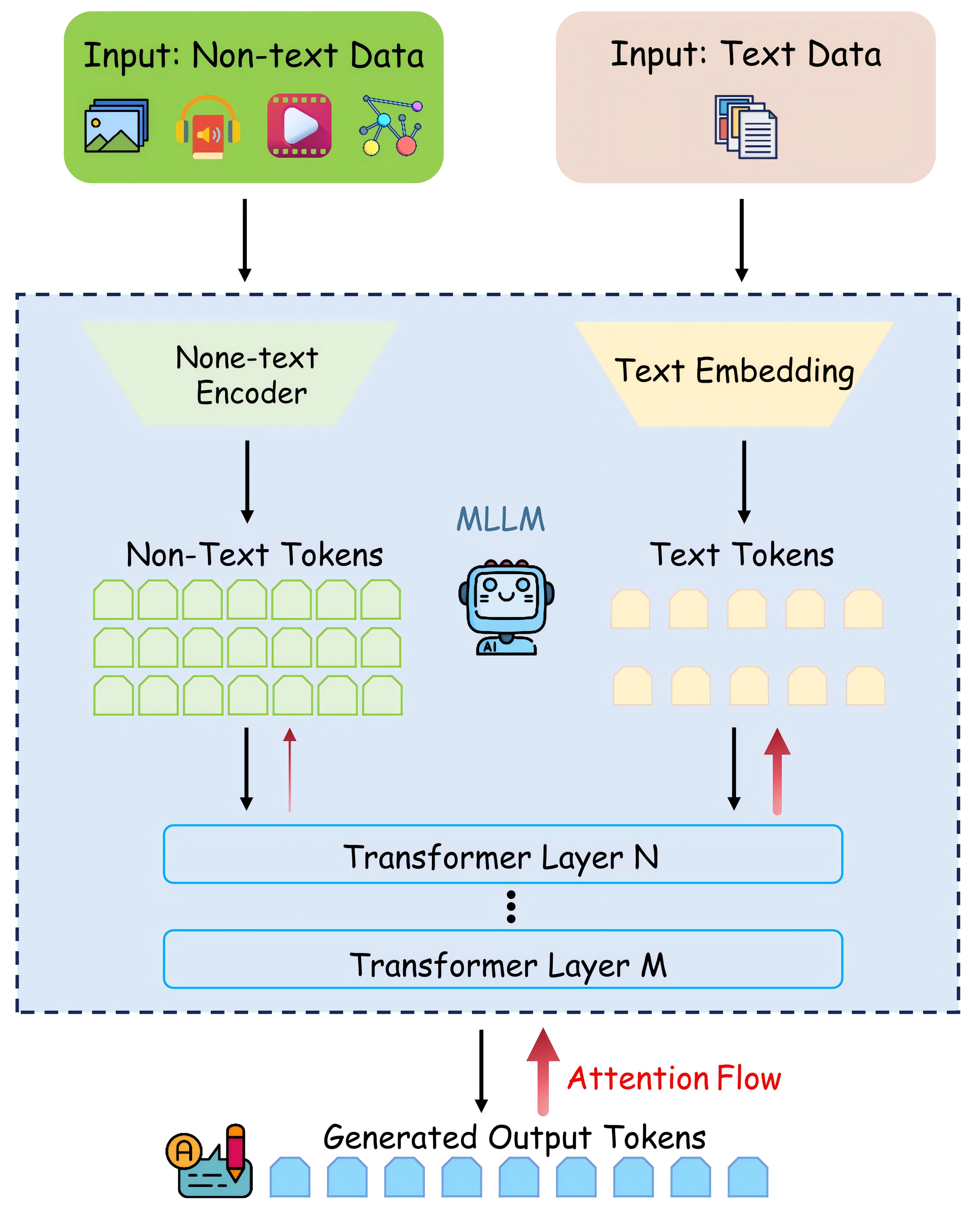

When Language Overrules: Revealing Text Dominance in Multimodal Large Language Models

Multimodal Large Language Models (MLLMs) achieve strong results but suffer from text dominance, where textual inputs outweigh other modalities. We present the first systematic study of this issue across images, videos, audio, time series, and graphs, and introduce two metrics, the Modality Dominance Index (MDI) and the Attention Efficiency Index (AEI), to quantify the imbalance. Our analysis shows that text dominance is pervasive and identifies its key causes. We also propose a simple token compression method that rebalances attention, reducing LLaVA-7B’s MDI from 10.23 to 0.86. This work offers insights and methods for building more balanced multimodal models.

VEX Robotics Competition

Led my school’s VEX Robotics club and was responsible for programming and debugging the robotic systems.

Won Gold Awards at the China Zone Selections, the Asia Championships, the Asia Open, and the World Championships in the United States during the 2018 season.

🎖 Honors and Awards

- 2024.10 Huang Qianheng Scholarship.

- 2023.06 Champion, 3rd Youth Campus Volleyball League of Sichuan Province.

- 2022.11 Second Prize, Asia-Pacific Undergraduate Mathematical Contest in Modeling.

- 2022.06 Third Prize, 2nd Youth Campus Volleyball League of Sichuan Province.

📚 Academic Services

- Conference Reviewer: IJCNN 2024, ACL 2024, CaLM @NeurIPS 2024, ICLR 2025, AAAI 2026

- Journal Reviewer: IEEE Transactions on Neural Networks and Learning Systems (IEEE TNNLS)